As we enter the age of quantum computing, a fundamental question arises: what sets a quantum bit—or qubit—apart from a classical bit? While both serve as the basic units of information in their respective paradigms, their operational principles and implications are radically different. Understanding these differences is crucial to grasping the potential and challenges of quantum computing.

Classical Bits: The Foundation of Traditional Computing

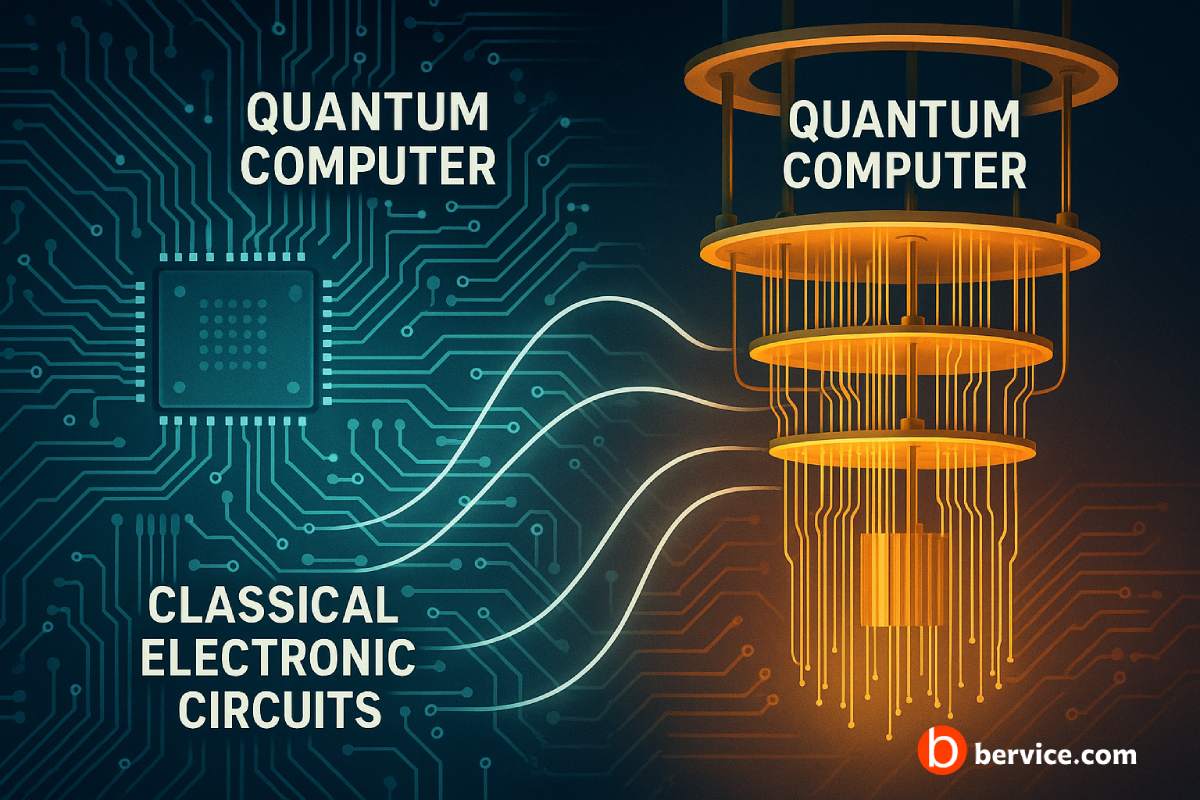

In classical computing, all information is encoded using bits. A bit is a binary unit that can exist in one of two distinct states: 0 or 1. Bits are manipulated using logic gates such as AND, OR, and NOT, which form the basis of everything from calculators to supercomputers. Because classical bits are deterministic, each bit has a definite value at any given time: either on (1) or off (0).
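To make the idea of gates acting on bits concrete, here is a minimal sketch of a half adder, a standard small circuit that adds two bits using one XOR gate and one AND gate. The function name `half_adder` is illustrative and not taken from any particular library.

```python
def half_adder(a: int, b: int) -> tuple[int, int]:
    """Add two bits and return (sum_bit, carry_bit)."""
    sum_bit = a ^ b    # XOR gate: 1 when exactly one input is 1
    carry_bit = a & b  # AND gate: 1 only when both inputs are 1
    return sum_bit, carry_bit


# Print the full truth table: every input pair has exactly one definite output.
for a in (0, 1):
    for b in (0, 1):
        print(a, b, half_adder(a, b))
```

The same inputs always produce the same outputs, which is the determinism described above.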

This binary model is reliable, predictable, and has fueled the technological revolution for decades. However, a classical register holds only one value at a time: n bits store exactly one of 2ⁿ possible values, so exploring many candidate values means processing them one after another or adding more hardware.

Quantum Bits (Qubits): Harnessing Superposition and Entanglement

Qubits, the building blocks of quantum computing, operate under the laws of quantum mechanics. Unlike a classical bit, a qubit can exist not only in the state 0 or 1 but also in a superposition of both: a weighted combination whose two weights (complex numbers called amplitudes) determine the probability of each outcome. When the qubit is measured, the result is always a single definite 0 or 1, and the superposition is lost.
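The following is a minimal sketch of a single qubit, assuming the usual textbook representation of a state as two complex amplitudes. The names `ZERO`, `hadamard`, and `probabilities` are illustrative choices; this is plain Python, not the API of any quantum library.

```python
import math

# A qubit state is a pair of complex amplitudes (alpha, beta) for |0> and |1>.
ZERO = (1 + 0j, 0 + 0j)


def hadamard(state):
    """Apply the Hadamard gate, which turns |0> into an equal superposition."""
    alpha, beta = state
    s = 1 / math.sqrt(2)
    return (s * (alpha + beta), s * (alpha - beta))


def probabilities(state):
    """Born rule: each outcome's probability is the squared magnitude of its amplitude."""
    alpha, beta = state
    return (abs(alpha) ** 2, abs(beta) ** 2)


plus = hadamard(ZERO)
p0, p1 = probabilities(plus)
print(p0, p1)  # both are approximately 0.5
```

Before measurement the state carries both amplitudes; a measurement would return 0 or 1, each with probability of about one half.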

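This capability is what quantum algorithms build on. For example, 3 classical bits hold exactly one of 8 (2³) possible values at a time, whereas the state of 3 qubits is described by 8 amplitudes, one for each of those values. A quantum gate acts on all of these amplitudes at once, which is often described as processing many possibilities in parallel. The parallelism is not free, though: measuring the 3 qubits still returns only one 3-bit result, so a useful algorithm must arrange interference so that the correct answers become the likely ones.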
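As a rough illustration of the 3-qubit example, the sketch below stores the 8 amplitudes of an equal superposition and then simulates one measurement. The helper `measure` and the constants are assumptions made for this example; a real device would not expose its amplitudes this way.

```python
import math
import random

N_QUBITS = 3
DIM = 2 ** N_QUBITS  # 8 basis states: 000 through 111

# Equal superposition: every basis state has amplitude 1/sqrt(8).
state = [1 / math.sqrt(DIM)] * DIM


def measure(state, rng=random):
    """Sample one basis state with probability |amplitude|^2; return it as a bit string."""
    weights = [abs(a) ** 2 for a in state]
    index = rng.choices(range(len(state)), weights=weights, k=1)[0]
    return format(index, f"0{N_QUBITS}b")


outcome = measure(state)
print(len(state), "amplitudes, but one measured result:", outcome)
```

The state needs 8 numbers to describe, yet each run prints a single 3-bit string, chosen at random.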

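Another critical feature of qubits is entanglement, a quantum phenomenon in which the state of one qubit cannot be described independently of the state of another, regardless of the distance between them. Measurement results on entangled qubits are correlated, although these correlations cannot be used to send information faster than light. Quantum algorithms and protocols use entanglement as a resource for computations that are hard to reproduce classically.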
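A small sketch can show what these correlations look like for the standard Bell state (|00> + |11>)/√2. The dictionary layout and the helper `measure_pair` are assumptions made for illustration; the simulation only reproduces the measurement statistics in one fixed basis and does not capture everything that makes entanglement non-classical.

```python
import math
import random

# Amplitudes for the two-qubit basis states 00, 01, 10, 11.
# In this Bell state only 00 and 11 have nonzero amplitude.
s = 1 / math.sqrt(2)
BELL = {"00": s, "01": 0.0, "10": 0.0, "11": s}


def measure_pair(state, rng=random):
    """Measure both qubits: sample one basis label with probability |amplitude|^2."""
    labels = list(state)
    weights = [abs(state[label]) ** 2 for label in labels]
    return rng.choices(labels, weights=weights, k=1)[0]


samples = [measure_pair(BELL) for _ in range(1000)]
agree = all(bits[0] == bits[1] for bits in samples)
print("both qubits always agree:", agree)
```

Each qubit on its own looks like a fair coin flip, but the two results always match.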

Key Differences Between Bits and Qubits

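- State Representation:
  - Bits: Exactly 0 or 1 at any time
  - Qubits: Can be in a superposition of 0 and 1, described by two complex amplitudes
- Processing Power:
  - Bits: n bits hold one of 2ⁿ possible values at a time
  - Qubits: n qubits are described by 2ⁿ amplitudes, but a measurement returns only n classical bits; speedups come from algorithms that exploit interference, and only for certain problems
- Entanglement:
  - Bits: Each bit has its own definite value, independent of the others
  - Qubits: Can be entangled to exhibit non-local correlations, which cannot be used to send signals
- Measurement:
  - Bits: Can be read directly without being changed
  - Qubits: Collapse to 0 or 1 upon measurement, losing superposition
- Error Sensitivity:
  - Bits: Relatively stable and easy to correct
  - Qubits: Extremely sensitive to environmental noise (decoherence) and harder to stabilize and correct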
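The scaling difference can be made concrete by counting the memory a classical computer would need to store a full n-qubit state vector. This is a back-of-the-envelope sketch that assumes each amplitude is stored as a 16-byte complex number (two 64-bit floats); the function name is illustrative.

```python
BYTES_PER_AMPLITUDE = 16  # assumption: one complex number as two 64-bit floats


def statevector_bytes(n_qubits: int) -> int:
    """Memory needed to store all 2**n amplitudes of an n-qubit state vector."""
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE


for n in (3, 10, 30, 50):
    print(f"{n} qubits -> {statevector_bytes(n):,} bytes")
```

Under this assumption, 30 qubits already need 16 GiB, and every additional qubit doubles the requirement. This is why simulating even modest quantum registers on classical hardware becomes impractical.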

Why This Matters

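The transition from bits to qubits is not just a technical upgrade; it is a paradigm shift. For certain problems, quantum algorithms offer a theoretical speedup large enough that a sufficiently large, error-corrected quantum computer could finish in practical time a computation that is out of reach for classical supercomputers. Factoring large integers with Shor’s algorithm, which matters for cryptography, is the best-known example. Other candidate areas include materials science, complex optimization, and quantum simulation.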

However, this power comes with immense technical challenges. Keeping qubits in stable superposition and entangled states requires isolating them from environmental noise and, for some hardware such as superconducting qubits, cooling them to temperatures close to absolute zero. As a result, today’s quantum computers remain experimental and resource-intensive.

Conclusion

While bits remain the cornerstone of today’s digital world, qubits represent the future of computation. Their ability to exist in superposition and to interact through entanglement opens the door to revolutionary advances, but it also introduces new engineering, physical, and philosophical questions. Understanding the difference between bits and qubits is not just about learning terminology; it is about preparing for the next wave of technological evolution.

Connect with us: https://linktr.ee/bervice