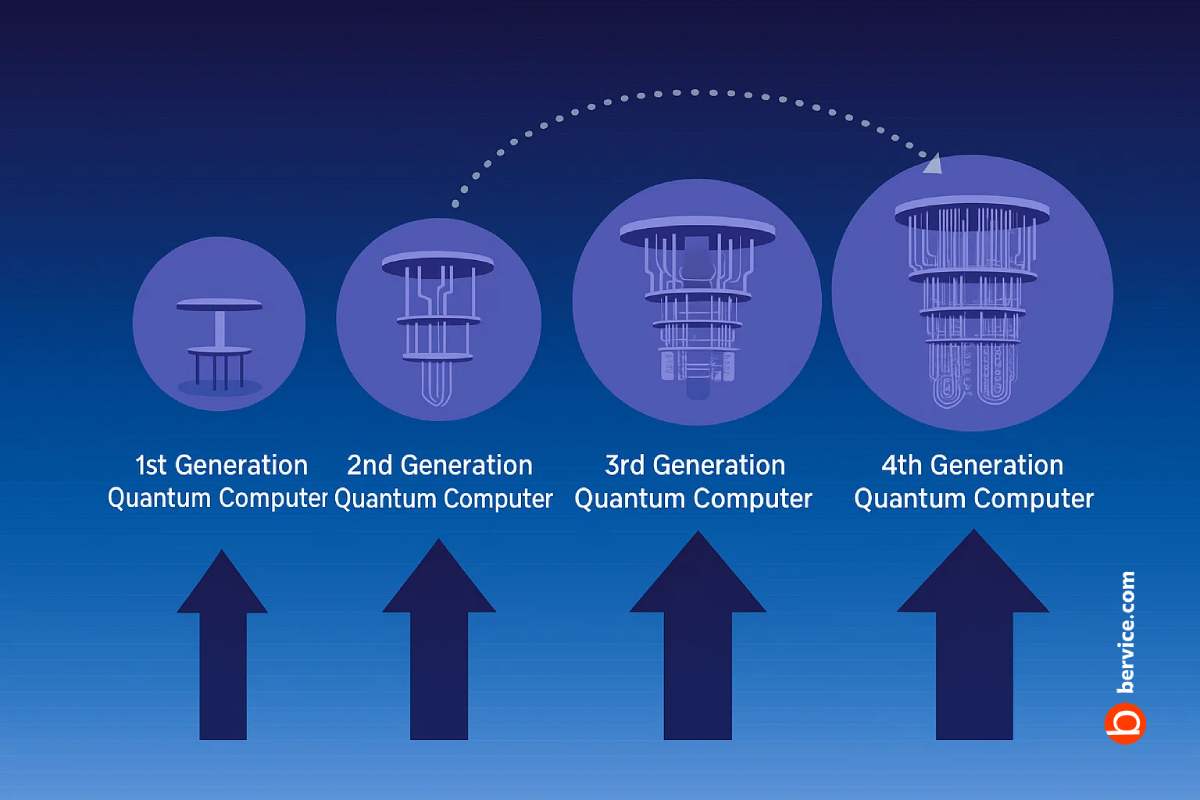

Quantum computing is emerging as one of the most transformative technologies of the 21st century. Just as classical computing has evolved through multiple generations—from vacuum tubes to transistors to integrated circuits—quantum computers are also developing in successive waves of innovation. Each generation represents improvements in stability, scalability, and computational efficiency, moving closer to practical, fault-tolerant quantum computing.

First Generation: Noisy Intermediate-Scale Quantum (NISQ) Computers

The first generation of quantum computers, often referred to as NISQ devices, comprises the machines available today from companies like IBM, Google, and Rigetti. These systems operate with a limited number of qubits (tens to a few hundred, with the largest processors now exceeding a thousand) and suffer from noise, decoherence, and error rates that make large-scale computations unreliable. Their main purpose is experimental: testing algorithms, exploring optimization problems, and advancing research. While their performance is not yet superior to classical supercomputers for most tasks, they are crucial for probing where quantum advantage might appear in very specific applications, such as quantum chemistry simulations and combinatorial optimization.
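
To see why noise limits the size of NISQ computations, here is a rough back-of-the-envelope sketch in Python. It assumes every gate fails independently with the same probability, which is a simplification, and the 0.1% error rate and the function name are illustrative values chosen for this example rather than figures for any specific device.

```python
def circuit_success_probability(gate_error: float, num_gates: int) -> float:
    """Chance that no gate fails, assuming independent and identical gate errors."""
    if not 0.0 <= gate_error <= 1.0:
        raise ValueError("gate_error must be between 0 and 1")
    return (1.0 - gate_error) ** num_gates


if __name__ == "__main__":
    # Assumed (illustrative) error rate of 0.1% per gate.
    for num_gates in (10, 100, 1_000, 10_000):
        p = circuit_success_probability(0.001, num_gates)
        print(f"{num_gates:>6} gates -> success probability ~ {p:.3f}")
```

Under these assumptions the success probability falls off exponentially with circuit depth, which is why deep algorithms are out of reach without error correction.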

Second Generation: Error-Corrected Quantum Computers

The next step is fault-tolerant, error-corrected quantum computers. This generation will use error correction codes to protect the fragile state of qubits, enabling much longer and more reliable computations. Instead of just hundreds of physical qubits, these machines are expected to require millions of physical qubits to build thousands of logical qubits, the error-protected units that can sustain calculations over extended periods. The expected performance leap is large: once reliable error correction is achieved, these systems are projected to outperform classical supercomputers on specific problems in fields like drug discovery, cryptography, and financial modeling.
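
The "millions of physical qubits for thousands of logical qubits" ratio can be sketched with simple arithmetic. The example below assumes a rotated surface code, one commonly discussed error correction scheme, in which a logical qubit of code distance d uses d × d data qubits plus d × d − 1 measurement qubits. The choice of code, the distances shown, and the function names are assumptions made for illustration; real designs also need extra qubits for routing and other overhead that this sketch ignores.

```python
def physical_qubits_per_logical(distance: int) -> int:
    """Qubits in one rotated surface-code patch: d*d data + (d*d - 1) measurement."""
    if distance < 1 or distance % 2 == 0:
        raise ValueError("distance must be a positive odd integer")
    return 2 * distance * distance - 1


def total_physical_qubits(num_logical: int, distance: int) -> int:
    """Total for num_logical patches, ignoring routing and other overhead."""
    return num_logical * physical_qubits_per_logical(distance)


if __name__ == "__main__":
    # Illustrative distances; the distance a real machine needs depends on its error rates.
    for d in (11, 17, 23, 27):
        total = total_physical_qubits(1_000, d)
        print(f"distance {d:>2}: {total:,} physical qubits for 1,000 logical qubits")
```

With a distance in the low twenties, a thousand logical qubits already costs roughly a million physical qubits in this simplified model.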

Third Generation: Scalable, Modular, and Distributed Quantum Computers

The third generation envisions scalable and interconnected quantum systems. Unlike the early prototypes that function in isolation, these computers will use modular designs and quantum networks to interlink multiple processors, creating distributed quantum computing environments. This approach enhances scalability, as it avoids the physical limitations of fitting all qubits into a single machine. In terms of performance, distributed quantum computers are expected to pool qubits across modules and run parts of a workload in parallel, making it feasible to tackle much larger real-world problems in industries such as logistics, climate modeling, and AI training.

Fourth Generation: Quantum-Classical Hybrid Supercomputers

The most advanced future vision is the hybrid generation, where quantum processors integrate seamlessly with classical high-performance computing (HPC) systems. Instead of replacing classical computers, they will augment them, handling specialized quantum tasks like factorization, quantum simulation, or optimization, while classical processors manage deterministic, large-scale data handling. The potential performance difference is substantial: a well-integrated hybrid system could deliver large speed-ups, exponential in some cases such as factorization, for certain problems that are practically impossible today, bridging the gap between abstract quantum theory and large-scale industrial solutions.
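
The division of labor described above can be sketched as a simple loop in which a classical optimizer repeatedly calls a quantum processor. In this toy Python example the quantum call is replaced by a closed-form stand-in (the expectation value of Z for a single qubit rotated by an angle theta, which equals cos(theta)), and the gradient is estimated with the parameter-shift rule. All function names, the starting angle, and the learning rate are hypothetical choices for illustration, not any vendor's API.

```python
import math


def quantum_expectation(theta: float) -> float:
    """Stand-in for a quantum processor call: <Z> after Ry(theta) on |0> is cos(theta)."""
    return math.cos(theta)


def parameter_shift_gradient(theta: float) -> float:
    """Gradient from two extra 'quantum' evaluations shifted by +/- pi/2."""
    plus = quantum_expectation(theta + math.pi / 2)
    minus = quantum_expectation(theta - math.pi / 2)
    return 0.5 * (plus - minus)


def minimize_energy(theta: float = 0.3, learning_rate: float = 0.4, steps: int = 100):
    """Classical gradient descent driving the (simulated) quantum evaluations."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta, quantum_expectation(theta)


if __name__ == "__main__":
    theta, energy = minimize_energy()
    print(f"theta ~ {theta:.4f}, energy ~ {energy:.6f}")
```

The classical side only sees numbers returned by `quantum_expectation`, so in a real hybrid system that one function is where the call to quantum hardware would go.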

Key Performance Differences Across Generations

- NISQ Era: Limited scale, noisy results, mainly research and proof-of-concept.

- Error-Corrected Era: Reliable, long-duration computations; real-world applications like cryptography and pharmaceuticals.

- Modular/Distributed Era: Scalable to millions of qubits, solving industrial-scale challenges.

- Hybrid Era: True fusion of quantum and classical computing, offering potentially exponential speed-ups on suitable problems in science, finance, and AI.
