Introduction

Quantum computing, once a theoretical curiosity rooted in the strange laws of quantum mechanics, has evolved into one of the most promising frontiers in science and technology. Unlike classical computers, which process information as bits (0s and 1s), quantum computers use qubits (quantum bits). Qubits exploit superposition and entanglement, which lets quantum computers solve certain problems dramatically faster than classical machines, in some cases exponentially faster than the best known classical methods. But how did this revolutionary concept develop? This article traces the fascinating journey of quantum computing, from its theoretical origins to its current experimental breakthroughs.

Theoretical Foundations: 1980s

The seeds of quantum computing were planted in the early 1980s when physicist Richard Feynman pointed out a fundamental problem: classical computers struggle to simulate quantum systems efficiently. In his seminal 1981 lecture at MIT, Feynman proposed that only a quantum system could effectively simulate another quantum system—laying the groundwork for the field.

Shortly thereafter, David Deutsch at the University of Oxford formalized the idea by introducing the universal quantum computer in 1985. Deutsch’s model extended the classical Turing machine to operate with quantum principles, providing a theoretical blueprint for what would become the quantum computer.

Early Algorithms and Momentum: 1990s

The 1990s marked a turning point, as quantum computing moved from theory toward possibility. In 1994, Peter Shor developed Shor’s algorithm, which can factor large integers superpolynomially faster than the best known classical algorithms (a speedup often loosely described as exponential). Because the security of RSA encryption rests on the difficulty of factoring, this posed a potential threat to RSA. Around the same time, in 1996, Lov Grover introduced Grover’s algorithm, which offers a quadratic speedup for searching unstructured databases.
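Grover’s quadratic speedup can be made concrete with a small state-vector simulation. The sketch below is a minimal illustration under stated assumptions, not a production implementation: it assumes a single marked item, uses real-valued amplitudes in plain Python, and the function name `grover_search` is hypothetical. Each iteration applies the oracle (flip the sign of the marked item’s amplitude) and the diffusion step (reflect every amplitude about the mean). After roughly (π/4)·√N iterations the marked item is measured with high probability, whereas a classical search of an unstructured list needs about N/2 queries on average.

```python
import math
from typing import Tuple


def grover_search(n_qubits: int, marked: int) -> Tuple[int, float]:
    """Simulate Grover's algorithm on a real-valued state vector.

    Hypothetical helper for illustration. Returns the number of Grover
    iterations (oracle calls) used and the final probability of
    measuring the marked item.
    """
    size = 2 ** n_qubits
    # Start in the uniform superposition over all basis states.
    amps = [1 / math.sqrt(size)] * size
    # Near-optimal iteration count: floor(pi/4 * sqrt(N)).
    iterations = math.floor(math.pi / 4 * math.sqrt(size))
    for _ in range(iterations):
        # Oracle: flip the sign of the marked item's amplitude.
        amps[marked] = -amps[marked]
        # Diffusion: reflect every amplitude about the mean amplitude.
        mean = sum(amps) / size
        amps = [2 * mean - a for a in amps]
    return iterations, amps[marked] ** 2


if __name__ == "__main__":
    for n in (4, 8, 12):
        calls, prob = grover_search(n, marked=3)
        print(f"N={2 ** n}: {calls} oracle calls, P(success)={prob:.4f}, "
              f"classical average ~{2 ** n // 2} queries")
```

For N = 1024 (10 qubits), the simulation uses 25 oracle calls and finds the marked item with probability above 99%, compared with roughly 512 queries for an average classical search. The gap grows with N, which is what "quadratic speedup" means in practice.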

These groundbreaking algorithms proved that quantum computers, if built, could outperform classical machines in specific domains. Consequently, governments and academic institutions worldwide began investing in quantum computing research, sensing its strategic and scientific potential.

Experimental Advances: 2000s

With theoretical models in place, the 2000s saw rapid strides in experimental work. Researchers built rudimentary quantum systems using ion traps, superconducting circuits, and quantum dots. In 2001, researchers at IBM and Stanford University used a 7-qubit quantum computer based on nuclear magnetic resonance (NMR) to run a simplified version of Shor’s algorithm, factoring 15 into 3 × 5.
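The 2001 NMR experiment factored the number 15. In Shor’s algorithm, the quantum computer performs only one step: finding the order (period) r of a number a modulo N, that is, the smallest r with a^r ≡ 1 (mod N). The rest is classical number theory. The sketch below is a minimal, hypothetical illustration of that classical reduction; the function names `find_order` and `shor_reduction` are assumptions for illustration. Here `find_order` brute-forces the period classically, which takes exponential time for large N and is exactly the step a quantum computer would accelerate.

```python
import math
from typing import Optional, Tuple


def find_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    Assumes gcd(a, n) == 1. This is the step Shor's algorithm runs on a
    quantum computer; here it is done classically for illustration.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def shor_reduction(n: int, a: int) -> Optional[Tuple[int, int]]:
    """Try to split n into two factors using the order of a modulo n.

    Returns None when this choice of a fails; the caller then tries another a.
    """
    g = math.gcd(a, n)
    if g != 1:
        return g, n // g  # a already shares a nontrivial factor with n
    r = find_order(a, n)
    if r % 2 == 1:
        return None  # odd order: this a does not help
    y = pow(a, r // 2, n)  # a^(r/2) mod n
    if y == n - 1:
        return None  # a^(r/2) is -1 mod n: this a does not help
    # y*y == 1 (mod n) with y != +/-1, so gcd(y - 1, n) is a proper factor.
    p = math.gcd(y - 1, n)
    return p, n // p


if __name__ == "__main__":
    print(shor_reduction(15, 7))  # (3, 5)
```

For a = 7, the powers modulo 15 are 7, 4, 13, 1, so the order is r = 4. Then 7² mod 15 = 4, and gcd(4 − 1, 15) = 3 yields 15 = 3 × 5.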

Despite these breakthroughs, scalability remained a formidable challenge. Quantum decoherence—where qubits lose their quantum state due to environmental noise—posed a critical obstacle, limiting the size and reliability of quantum systems.

The Quantum Race: 2010s

The 2010s ushered in what many call the “Quantum Race,” as tech giants, startups, and national governments accelerated development. Google, IBM, Intel, and Rigetti began building increasingly powerful superconducting qubit processors.

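In 2019, Google claimed “quantum supremacy,” reporting that its 53-qubit Sycamore processor completed a specific task (sampling the output of random quantum circuits) in about 200 seconds. Google estimated that the same task would take a state-of-the-art supercomputer 10,000 years. IBM disputed that estimate, arguing that a classical supercomputer could finish the job in about 2.5 days. Although the task had little practical use, the experiment was a historic milestone.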

Meanwhile, companies like D-Wave promoted quantum annealing systems for optimization problems, and in 2016 IBM opened small quantum processors to public experimentation through its cloud-based IBM Quantum Experience platform.

Toward Fault Tolerance and Practical Use: 2020s

In the 2020s, the field is moving toward error correction, fault tolerance, and quantum advantage in real-world applications. Companies are also pursuing alternatives to superconducting qubits, including trapped ions (IonQ), neutral atoms (QuEra), and photonics (PsiQuantum).

In parallel, quantum software ecosystems are maturing. Open-source frameworks like Qiskit (IBM), Cirq (Google), and PennyLane (Xanadu) are enabling developers and researchers to experiment with algorithms, simulate quantum circuits, and develop hybrid quantum-classical workflows.

Governments are also establishing national quantum strategies. The U.S. National Quantum Initiative Act, the EU Quantum Flagship, and China’s multibillion-dollar investments underscore the growing geopolitical and economic stakes.

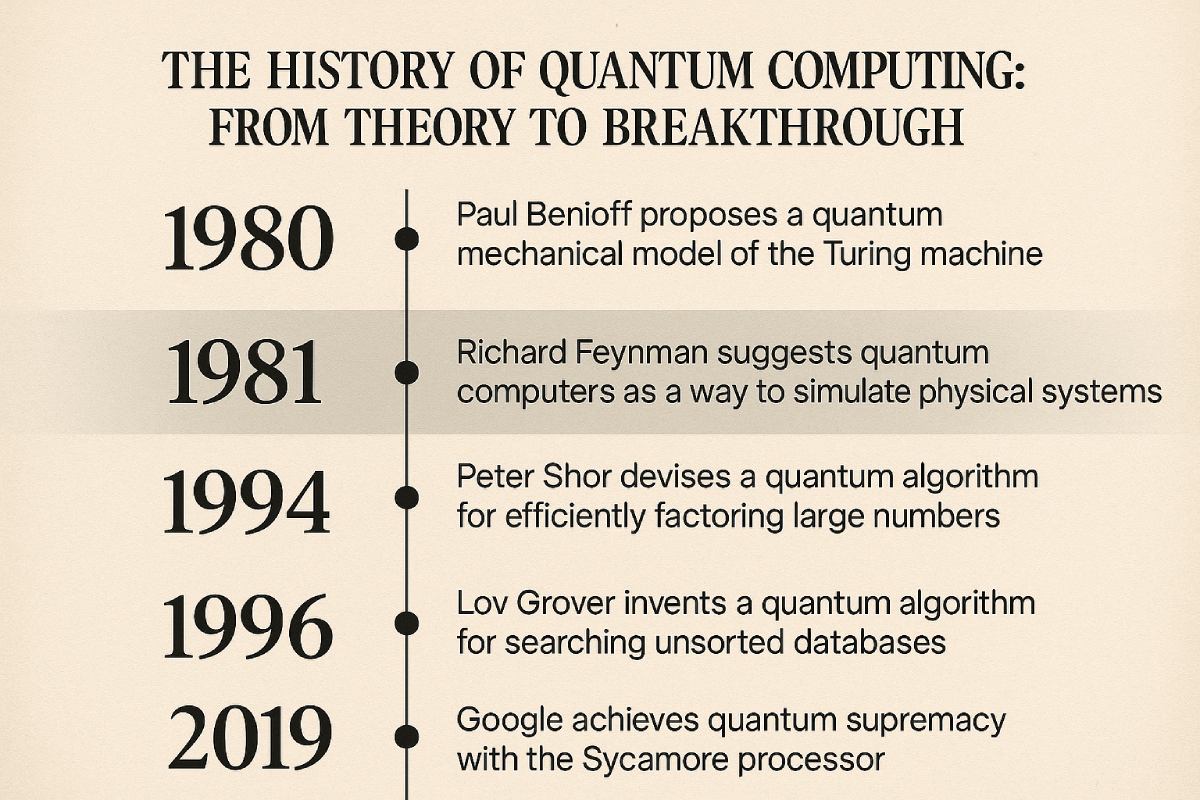

Timeline Overview

| Year | Milestone |
|---|---|
| 1981 | Feynman proposes quantum simulation |
| 1985 | Deutsch introduces universal quantum computer |
| 1994 | Shor’s algorithm shakes cryptography |
| 1996 | Grover’s algorithm speeds up search problems |
| 2001 | IBM & Stanford build 7-qubit quantum system |
| 2019 | Google claims quantum supremacy |
| 2023+ | Focus shifts to fault-tolerant quantum computing |

Conclusion

The history of quantum computing is a narrative of imagination, persistence, and radical science. What began as a theoretical response to the limits of classical computation has grown into a global scientific movement that is reshaping how we understand information and computation. While large-scale, practical quantum computing remains on the horizon, its history is a powerful testament to human ingenuity, and its future could transform fields from cybersecurity and medicine to materials science and artificial intelligence.

Connect with us: https://linktr.ee/bervice